I have previously blogged about synching tables with different Primary keys (see: Part 1 – Upload Synchronization where the Client and Server Primary Keys are different and Part 2 – Bidirectional Synchronization where the Client and Server Primary Keys are different ) and in both approach, we relied on intercepting the change dataset to alter the structure of the table being synched by adding/removing columns to match the Primary key of the destination table.

In the last couple of days, I have received questions around the same scenario. The last one is as follows:

In my case I have a database where the majority of the PK’s are identity autoincrementing ints.

we are now in a position were we need to make this data available in a distributed environment (i.e mobile devices, external software etc) and of course kept in sync.

As I cant change from using the current PK sync I was thinking that i could add a GUID to the columns which would be used as sync.

on the client I have complete control over how that database structure is created so I was thinking there i could have the GUID as the identifying key

on first sync the server would send across the required data (and GUID).if the client wants to do inserts they can do and then sync (sending across GUID) – although server would auto increment the PK (but we can still match on the GUID for future syncs).

Does this sound like the right solution and if so can the Microsoft Sync framework handle this task?

I promised I’d write a blog post to see if it could be done or at least come up with something similar.

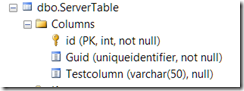

Let’s assume we have the following table structures:

As you would notice, the server table has its PK on the Id column which is setup as an Identity column as well. The client table on the other hand has its Guid column as its PK. In this scenario the Guid is used to uniquely identify the client rows whereas in my previous posts, we used a Guid to uniquely identify clients.

What if we can “trick” Sync Framework into thinking that the PK on the server table is the Guid column instead of the identity column Id? Which means that as far as the physical table is concerned, Id remains the PK, but inside our scope definition, the PK is the Guid column.

So I wrote a quick test to check if this is possible. See the following code:

- using System;

- using System.Data.SqlClient;

- using Microsoft.Synchronization;

- using Microsoft.Synchronization.Data;

- using Microsoft.Synchronization.Data.SqlServer;

- namespace SyncFxForums

- {

- class Program

- {

- static void Main()

- {

- //setup the connections

- var serverConn = new SqlConnection(“Data Source=localhost; Initial Catalog=SyncFxLabs; Integrated Security=True”);

- var clientConn = new SqlConnection(“Data Source=localhost; Initial Catalog=SyncFxClient; Integrated Security=True”);

- const string scopeName = “DifferentPKScope”;

- //provision server

- var serverProvision = new SqlSyncScopeProvisioning(serverConn);

- if (!serverProvision.ScopeExists(scopeName))

- {

- var serverScopeDesc = new DbSyncScopeDescription(scopeName);

- var serverTableDesc = SqlSyncDescriptionBuilder.GetDescriptionForTable(“ServerTable”, serverConn);

- // our server and client tables has different names, so let’s setup a common name

- serverTableDesc.GlobalName = “TestTable”;

- serverScopeDesc.Tables.Add(serverTableDesc);

- // remove the identity column PK since we dont need to sync it

- serverScopeDesc.Tables[“TestTable”].Columns.Remove(serverScopeDesc.Tables[“TestTable”].Columns[“id”]);

- // “trick” Sync Fx that Guid is the PK

- serverScopeDesc.Tables[“TestTable”].Columns[“Guid”].IsPrimaryKey = true;

- serverProvision.PopulateFromScopeDescription(serverScopeDesc);

- serverProvision.Apply();

- }

- //provision client

- var clientProvision = new SqlSyncScopeProvisioning(clientConn);

- if (!clientProvision.ScopeExists(scopeName))

- {

- var clientScopeDesc = new DbSyncScopeDescription(scopeName);

- var clientTableDesc = SqlSyncDescriptionBuilder.GetDescriptionForTable(“ClientTable”, clientConn);

- clientTableDesc.GlobalName = “TestTable”;

- clientScopeDesc.Tables.Add(clientTableDesc);

- clientProvision.PopulateFromScopeDescription(clientScopeDesc);

- clientProvision.Apply();

- }

- // create the sync orchestrator

- var syncOrchestrator = new SyncOrchestrator();

- //setup providers

- var localProvider = new SqlSyncProvider(scopeName, clientConn);

- var remoteProvider = new SqlSyncProvider(scopeName, serverConn);

- syncOrchestrator.LocalProvider = localProvider;

- syncOrchestrator.RemoteProvider = remoteProvider;

- // set the direction of sync session to Upload and Download

- syncOrchestrator.Direction = SyncDirectionOrder.UploadAndDownload;

- // execute the synchronization process

- var syncStats = syncOrchestrator.Synchronize();

- // print statistics

- Console.WriteLine(“Start Time: “ + syncStats.SyncStartTime);

- Console.WriteLine(“Total Changes Uploaded: “ + syncStats.UploadChangesTotal);

- Console.WriteLine(“Total Changes Downloaded: “ + syncStats.DownloadChangesTotal);

- Console.WriteLine(“Complete Time: “ + syncStats.SyncEndTime);

- Console.WriteLine(String.Empty);

- Console.ReadKey();

- }

- }

- }

If you look at the code, its pretty much standard except for the server provisioning part. Notice also, that the table names differs in the client (ClientTable) and server (ServerTable) tables so we have to set the GlobalName to “TestTable” to settle for a common name.

The line :

var serverTableDesc = SqlSyncDescriptionBuilder.GetDescriptionForTable("ServerTable", serverConn);

simply retrieves the ServerTable structure as it is with columns id, Guid and TestColumn. In addition, GetDescriptionForTable would pick up that column Id is the PK.

Since we don’t need to sync the Id column to the client side, we simply remove it from the column collection using this line:

serverScopeDesc.Tables["TestTable"].Columns.Remove(serverScopeDesc.Tables["TestTable"].Columns["id"]);

We then tag the Guid column as the PK via this line:

serverScopeDesc.Tables["TestTable"].Columns["Guid"].IsPrimaryKey = true;

Looking at the scope_config entry, you’ll find that the Guid column is now tagged as the PK:

The tracking table also reflects that Guid is the PK:

So there you go, we retained the existing PK on the server table as it is. Inside the scope definition though, Sync Framework is configured to use the Guid column as the PK.

Give it a go and let me know how it goes.

Cheers,

JuneT

Works a treat – I really didnt know it would be so easy – Fantastic post! once again

How can i use this when I’m using a template?

Violation of PRIMARY KEY constraint ‘PK_Sync.serverTable_tracking’. Cannot insert duplicate key in object ‘Sync.serverTable_tracking’.

The statement has been terminated.

this is the error i got

i GOT IT ITS WORKING.

THANKS FOR THE POST..

Hi JuneT, nice post.. quick thought, how about the performance/scaling issue using GUID as the pk in the tracking. i recently switch the dataType of from GUID to ‘long’ (with clientId information encoded) and turns out the sync performance increase a lots. of course the drawback will be the extra encoding/decoding logic on the ‘long’ column.

the use of Guid is just to guarantee the uniqueness and simply using NewID() or NewSequentialID() to generate the key and not worry about other stuff. you can use a different key for as long as you can guarantee the uniqueness and you’re find writing extra code for encoding/decoding.

Hi June. Great Post.

We are currently building an occasionaly offline application using ADO.net 2.0 dataservices. The issue we have is the local cache database has primary keys that are identity enabled, also the corresponding table on the server is the same. Updates are fine, but my concern is an Insert that takes place on the client and isnt uploaded before an insert has taken place on the server. Obviously then causing a primary key violation.

I am at the stage where I can change the database structure… Question what is the best structure to use with sync framework.

i suggest you add a client id and combine that with your identity column as your PK.

Hi June,

Great post.

I have a requirement. I need to sync the data from multiple tables( for example, order and product are my tables in the source DB) to single table(table name is Destination). Please find the following points for my requirement

1. Need a specific set of columns(orderid, ordername) from the Order table and specific set of column(productid, productname) from the Product table.

2. Added the two tables in my server scope.

3. I have destination table with columns(orderid, ordername,productid, productname)

4. Need to sync the data from Source tables to destination table.

I tried adding the Souce tables and destination table in Server and Client provisioning. But while syncing the data i am getting error like

{“Violation of PRIMARY KEY constraint ‘PK__#4D2B3E9__5C66259B4E1F62D7’. Cannot insert duplicate key in object ‘dbo.@changeTable’.\r\nThe data for table-valued parameter \”@changeTable\” doesn’t conform to the table type of the parameter.\r\nThe statement has been terminated.”}

Please help me out on the same.

Regards

Arun Lal Prasanth

first, your error not necessarily sync related. its a sql error for primary key violation. you are trying to insert a row whose pk already exists.

second, you’re scenario is not supported. Sync Framework is more for replication/synchronization rather than data transformation.

assumng you can manipulate the change dataset to combine the columns from the two source table so you can map it to a single table, it would require you to always update both source rows in the source tables everytime you update one of the tables. for example, if the order table is updated and the product table is not, then your change dataset will have rows for orders table but no rows for products table, so you can’t merge them in the change dataset.

hi june,

Thank you for your reply.

Actually i have written some logic in the ChangesSelected remoteProvider. I am getting the whole datatable. I have 20 rows. As per my requirement, it rows should alwaus be divided by 2. (i.e. 20/2 is 10). So i am explicity creating the datatable with the same columns what i got in that 20 rows.

Now in my result datatable, i have 10 rows with all required data. Actually i have used LINQ for getting the datatable.

I have added the datable using

var results = from table1 in dt1.AsEnumerable()

join table2 in dt2.AsEnumerable() on (Guid)table1[“LeadId”] equals (Guid)table2[“LeadId”]

select new

{

LeadId = new Guid(table1[“LeadId”].ToString()),

Subject = (string)table1[“Subject”],

FullName = (string)table1[“FullName”],

Designation = (string)table2[“new_Designation”],

TestField = (string)table2[“new_Testfield”],

UpdateTimeStamp = (Int64)table1[“sync_update_peer_timestamp”],

CreateTimeStamp = (Int64)table1[“sync_create_peer_timestamp”],

UpdateKey = (int)table1[“sync_update_peer_key”],

CreateKey = (int)table1[“sync_create_peer_key”]

};

e.Context.DataSet.Tables[“Lead”].Rows.Add(results);

I am getting the error like

“Type of value has a mismatch with column typeCouldn’t store <System.Linq.Enumerable+d__61`4[System.Data.DataRow,System.Data.DataRow,System.Guid,f__AnonymousType0`9[System.Guid,System.String,System.String,System.String,System.String,System.Int64,System.Int64,System.Int32,System.Int32]]> in LeadId Column. Expected type is Guid.”

I need to know, is it the correctway to sync the datatable. Please help me on this.

Regards

Arun

as i have mentioned, this is not what Sync Framework is designed for. Sync Framework is for incremental changes – synching what has changed since the last sync. you mentioned you are selecting the entire database, then you are not retrieving incremental changes. if you just want to copy and split the source rows, you can do so without using Sync Framework.

as to you error, you are adding an enumerable when the expected parameter for Add is a Data Row, see: http://msdn.microsoft.com/en-us/library/5ycd1034(v=vs.80).aspx

Hi June,

Thanks for your posts. I have quite a unique situation it seems and have come up with a process using Sync to download specific “Event data” from a master database to Sql CE clients.

A scenario is as follows:

Step 1.)

A users launches a forms app in online mode and downloads needed lookup tables with their data and templates for filtering needed data (one time deal). In this process I define two scopes, one for lookup data (Lookup Scope) and another for event data. (Event Scope)

Step 2.)

The user then selects events from a grid view and downloads them to the sql ce datastore on their hard drive. This process creates a separate scope for each client identified by MACHINENAME_EVENTID the provisions the client and downloads the needed event data.

Step 3.) The user goes offline does their thing and comes back for Synchronization.

The problem that we are having is similar to what is described In Parts 1-3 of these posts. Recap, we our master Event Table uses Auto incrementing primary keys along with a few tables that we are inserting records in when creating events in offline mode. During the client provision process, the clients on the CE data stores also have integer auto incrementing keys inherited from the server. So when we insert a new record on the CE side they newly generated key is a primary for another record in the master database so during synchronization we are overwriting data. Very Bad.

So I need to come up with a way, very quickly to Update records for existing Events and Insert properly for new Events.

There is another layer of complexity, and Events has Opportunities (Opportunity table), Results (Results Table), and at least four other tables that records are being inserted into during the Event creating process that are also getting collided with.

Suggestions?

I can post some code if you’d like to take a closer look.

-F

Hello Again June,

I applied the method you suggested in the post and I have proved it in a test project, about to update our application to work with GUIDS instead of pk’s. Thanks for the post. -F

Great article. But I have one question on above implementation. How would we handle the foreign keys that have primary key as identity field.

its a tricky one, but if you store the original pk, then you can do a lookup during the sync of the child table to lookup the new pk value of the parent table..

Hi June, thanks for this article and your comments. I decided to go down this route and invested quite some time into changing my Sql-compact clients – to Sql server app syncronising over WCF. I am stuck with this problem from Vipul’s question though – I have FK that I’ve excluded locally from the CE db and when the changes reach the server they are committed in one bulk transaction – both tables (the primary and the dependant one with the FK) have their records inserted together and both the Applying Changes and Changes Applied events are fired for the bulk changes and not per row or table. Therefore there is no way to find out what the newly generated server identity PKs are and use them for the child records.

So how do I do a lookup during the sync of the child table? Do you mean that I should have 2 separate scopes and sync the second with the child table(s) after the first one has finished so that I can have access to the new PKs in the Applying changes event handler?

Thanks a lot,

Bogdan

2 scopes will be the easiest approach

Continuing Vipul’s question, I’m quite new to this. I’ve taken this approach not because my keys are different but as I have identity int PK in each table I was needing a GUID to avoid id collision. How could you take advantage of the approach you proposed to Vipul’s question? How can I rebuild the relationship as Id’s changed. Thanks!

Hi Tomas,

what’s your clients PK and FK? and what’s the destination PK and FK look like after you added the Guid?

Actually what I’m trying to accomplish is to sync two DBs bidirectionally. Both schemas are the same, but the problem is that all the tables PK’s are identity ints. So I took this path of adding a GUID SyncId for synchronization. But when it tries to insert a parent it stills passes the old FK int id which should be replaced with the new one assigned at the moment of insertion.

so you generate a new guid for every row? you might want to share exactly what you did. perhaps post it in the forums.

hey nice tutorial. but am getting a problem. when i make any change in client table and do sync, it does not sync it with server table. means uploading is not done. Can you tell me what could be the problem? Thanks

try enabling tracing or subscribing to the ApplyChangeFailed event to get more information on what’s failing…

I have subscribed ApplyChangeFailed event but it does not call/ or show anything. I just getting failure notification form this “syncStats.UploadChangesFailed”

Actually I have 2 tables ( on client and server sides) one table is synchronizing perfectly but other one that is client table is not uploading data to servrtable

ok i just got the problem and that is “Cannot insert the value NULL into column ‘id’, table ‘SyncDB.dbo.ServerTable’; column does not allow nulls. INSERT fails. The statement has been terminated” Can you please guide me,how could I resolve this problem? i have to upload data from client to server table.

I have a table with identity column as primary key. To sync table I use GUID for uniqueness on both server and client. But i want to leave identity column as primary key (especially on client.). The goal is identity column has to be the same unique value on all clients and server. When a new row is inserted on one client, it will get new GUID value which is globally the same and new identity value which is unique only on that client. When synchronization is take place, changes are uploaded first to the server. The server have GUID as primary key and new row will be inserted with the same GUID value from the client but identity value may not be the same. It will be decided by the server at that time. Here is the question. After uploading changes to server is completed, will changes on identity column be downloaded to the original client again? If it is possible, how we can accomplished? Thanks

how do you ensure the client identity value is unique across all clients? do you partitioned the values? if you’re getting a new identity value in the server, the client doesn’t know that, so it might sync those records as new records.

also, i don’t understand how you’re setting the PKs as per your question above. What are the actual PKs on the tables and what are you setting as PKs in Sync Fx?

Thanks for your reply. In my case, actual PKs on the tables is identity column (example CustomerID in Customer table). Because this key is referenced by other table as foreign key we can’t leave identity column on client machine(example CustomerID On SaleOrder table). In your article why you leave id column (which is primary key on ServerTable) on ClientTable. Is it possible to synchronize including this identity column on all clients and server.

i think you miss the point of the post, it’s about the tables having different PKs and in the scenario above, only the server has an Identity column which is fine because you only have one database generating identity values.

in your case, it’s your clients generating identity values. unless you’re partitioning the values (e.g. client 1 = 1-1000, client 2 = 1001 – 2000, etc…), your client identity values will have collision when you put them on a single store (your server). if you’re client 1 produces PK identity value 1 and client 2 does the same, how do you differentiate them?

In my scenario, I have 2 client databases and one server database, structure is same for all. Clients send data to server periodically and receive data from server. Clients may increase in future. I am using MS Sync framework for database syncing purpose. Most tables have identity columns as primary keys. When data goes to server from different clients with same ids it is not synced properly. Then I added a new column Client_ID (GUID) to tables to solve the primary key issues. Also tables are using foreign keys, that are identity columns in parent tables. I don’t know how to deal with FKs as they are different on client and should be different on server . Please advise me how to resolve the foreign key issues.

there is no easy way to solve that. it’s an issue with the database design using identity columns and being consolidated in a central store. i suggest you modify your tables and pks/fks instead not to rely on identity columns for PK.

Thanks for the great article. Based on your sample tables, is it possible to have the id column on client table as well (not as PK or auto-generated – just as a normal integer column).

No updates are done to the id column on the client side. When a bi-directional synchronization (UploadAndDownload) is done, I want the id column on the client side to get updated values from Server table. Is it possible to achieve this programmatically without modifying the stored procedures that are generated?

try creating two separate scopes, one for download, and one for upload. for upload, dont include the id in the scopes. for download, include the id in the scopes.

[…] SYNCHRONIZING TABLES – Part 1, 2 and 3 […]

Could you help me with this problem

I am trying to use Microsoft Sync Framework for syncing 2 tables. I am using SyncOrchestrator to synchronize the two tables.

The problem is Employees has a field cate_id that has the id of the category(this is a FK to categories) and Employees2 has a field called Cat and this is storing the name of the category in the field directly so(is not a fk), if you see one table has id the other the name directly,

How could I sync those tables? any advice?

am not sure exactly what the problem is. what is the final sync outcome that you require? are you synching Employees with Employees2?

hello june,

i want to sync server database to client database with all table dynamically like using windows services is it possible ? if yes then how?